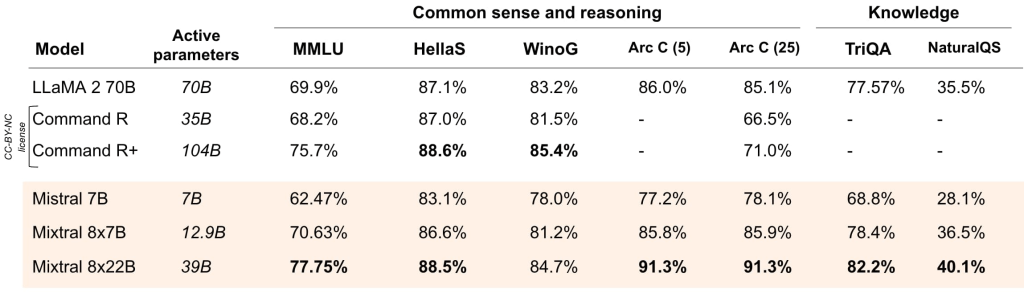

Mistral AI has released Mixtral 8x22B, which it says sets a new standard for performance and efficiency among open-source models. The model is multilingual and, according to Mistral, particularly strong at mathematics and coding.

Mixtral 8x22B is a Sparse Mixture-of-Experts (SMoE) model: of its 141 billion total parameters, only 39 billion are active for any given token, which keeps inference cost well below that of a dense model of the same total size.
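To make the 39-of-141 figure concrete, here is a minimal pure-Python sketch of top-k expert routing. It assumes Mixtral 8x22B follows the design Mistral described for Mixtral 8x7B (8 experts per layer, each token routed to the top 2, with a softmax taken over the selected router logits). The router weights, toy experts and dimensions are hypothetical stand-ins, not the model's real layers.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_moe(x, gate_weights, experts, k=2):
    """Toy sparse MoE layer: route x to its k highest-scoring experts.

    gate_weights: one router weight vector per expert; logit_i = w_i . x
    experts: callables mapping a vector to a vector of the same length.
    Only the k selected experts are evaluated; the others are never called.
    """
    logits = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in chosen])  # softmax over the top-k logits only
    out = [0.0] * len(x)
    for weight, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + weight * y_j for o, y_j in zip(out, y)]
    return out, chosen, logits

# Usage: 8 hypothetical toy experts, each scaling its input by a different factor.
random.seed(0)
dim, n_experts = 4, 8
gate = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_experts)]
calls = []  # records which experts actually ran

def make_expert(i):
    def expert(v):
        calls.append(i)
        return [(i + 1) * t for t in v]
    return expert

experts = [make_expert(i) for i in range(n_experts)]
x = [1.0, 0.5, -0.5, 2.0]
out, chosen, logits = top_k_moe(x, gate, experts, k=2)
print(f"ran {len(calls)} of {n_experts} experts: {chosen}")

# Reported Mixtral 8x22B figures: 39B active out of 141B total parameters.
print(f"active share: {39 / 141:.1%}")
```

Only the routed experts run, which is where the saving comes from. The active share (about 28%) is somewhat above 2/8 because, in the Mixtral design, only the feed-forward blocks are split into experts; attention and embedding layers are shared and run for every token.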

Beyond efficiency, Mixtral 8x22B is fluent in several major languages, including English, French, Italian, German, and Spanish, and its mathematics and coding skills make it well suited to technical work. Notably, the model natively supports function calling, paired with a ‘constrained output mode’, which is intended to make it easier to build applications on top of it and to modernise existing tech stacks at scale.
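Function calling generally works by having the model emit a structured request that the application parses and executes. The sketch below shows that application-side step under stated assumptions: the `{"name": ..., "arguments": {...}}` message shape, the `add` tool and the `dispatch` helper are hypothetical and are not Mistral's documented API. A constrained output mode of the kind described above should make the model's reply reliably parseable; the dispatcher still validates it before running anything.

```python
import json

# Hypothetical tool the application exposes to the model.
def add(a: int, b: int) -> int:
    return a + b

# Registry: tool name -> (callable, set of required argument names).
TOOLS = {"add": (add, {"a", "b"})}

def dispatch(model_output: str):
    """Parse a JSON tool call emitted by the model and run the matching tool.

    Assumes the reply has the (hypothetical) shape {"name": str, "arguments": dict}.
    Raises ValueError for unknown tools or mismatched arguments
    (malformed JSON raises json.JSONDecodeError, a ValueError subclass).
    """
    call = json.loads(model_output)
    name, args = call.get("name"), call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    fn, required = TOOLS[name]
    if set(args) != required:
        raise ValueError(f"bad arguments for {name!r}: {sorted(args)}")
    return fn(**args)

# A string standing in for a model's function-call reply.
reply = '{"name": "add", "arguments": {"a": 2, "b": 3}}'
print(dispatch(reply))
```

Rejecting unknown tool names and unexpected arguments keeps a malformed or adversarial reply from triggering arbitrary code, even when the output format itself is constrained.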