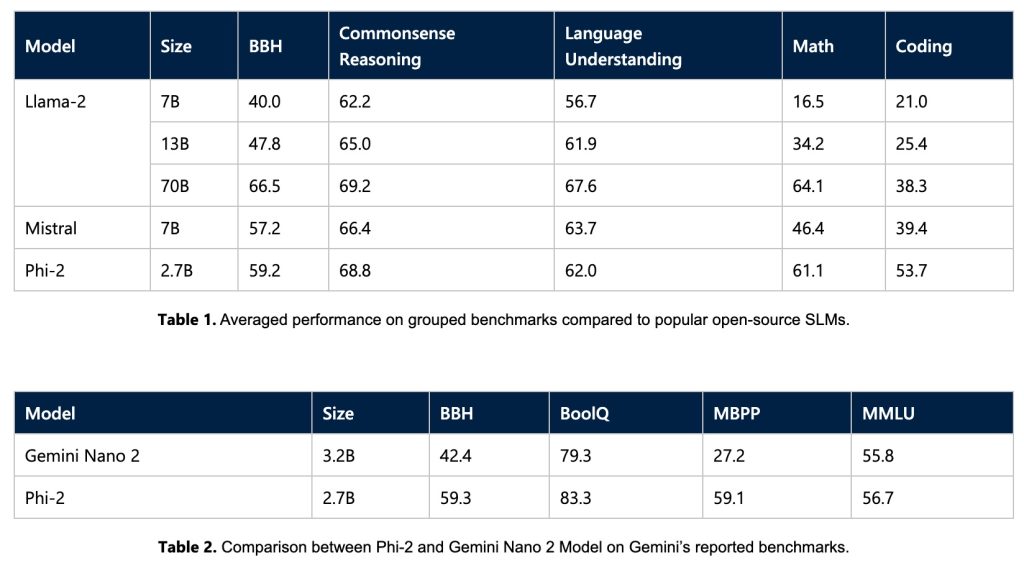

Microsoft’s 2.7 billion-parameter model Phi-2 showcases outstanding reasoning and language understanding capabilities, setting a new standard for performance among base language models with fewer than 13 billion parameters.

Phi-2 builds upon the success of its predecessors, Phi-1 and Phi-1.5, matching or surpassing models up to 25 times larger thanks to innovations in model scaling and training data curation.

The compact size of Phi-2 makes it an ideal playground for researchers, facilitating exploration in mechanistic interpretability, safety improvements, and fine-tuning experimentation across various tasks.
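To make "compact" concrete, here is a back-of-the-envelope sketch of how much memory the weights alone would occupy at a few common numeric precisions. The 2.7 billion parameter count comes from the article; the helper name `weight_memory_gb` and the choice of precisions are illustrative assumptions, and the estimate ignores activations, optimizer state, and framework overhead, so real usage will be higher.

```python
# Rough weight-memory estimate for a 2.7B-parameter model.
# Assumption: only the weights are counted (no activations, no optimizer state).

PARAMS = 2.7e9  # parameter count stated in the article

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Return weight storage in gigabytes, using 1 GB = 1e9 bytes."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for dtype, nbytes in BYTES_PER_PARAM.items():
        print(f"{dtype}: {weight_memory_gb(PARAMS, nbytes):.1f} GB")
```

At 16-bit precision the weights come to roughly 5.4 GB, which is why a model of this size can plausibly be loaded on a single consumer-grade GPU for interpretability or fine-tuning experiments.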

Phi-2’s achievements are underpinned by two key aspects:

- Training data quality: Microsoft emphasises the critical role of training data quality in model performance. Phi-2 leverages “textbook-quality” data, focusing on synthetic datasets designed to impart common sense reasoning and general knowledge. The training corpus is augmented with carefully selected web data, filtered based on educational value and content quality.

- Innovative scaling techniques: Microsoft applies new techniques to scale up Phi-2 from its predecessor, Phi-1.5. Knowledge transfer from the 1.3 bi