Stability AI has introduced the latest additions to its Stable LM 2 language model series: a 12 billion parameter base model and an instruction-tuned variant. Both models were trained on two trillion tokens across seven languages: English, Spanish, German, Italian, French, Portuguese, and Dutch.

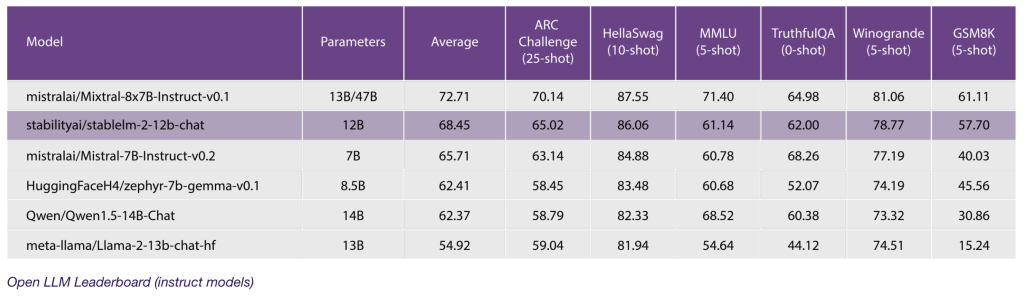

The 12 billion parameter model aims to balance strong performance against efficiency, memory requirements, and speed. It follows the framework laid out in Stability AI’s previously released Stable LM 2 1.6B technical report. The release extends the company’s model range and gives developers a transparent, capable tool for building on AI language technology.

Alongside the 12B model, Stability AI has also released a new version of its Stable LM 2 1.6B model. This updated 1.6B variant improves conversation abilities across the same seven languages while maintaining remarkably low system requirements.

Stable LM 2 12B is designed as an efficient open model tailored for multilingual tasks with smooth performance on widely available hardware.
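To put "widely available hardware" in rough perspective, the memory needed just to hold a model's weights can be estimated as parameter count times bytes per parameter. The sketch below is a back-of-the-envelope calculation, not a figure published by Stability AI: the parameter counts are the nominal 12B and 1.6B from the announcement, the precision labels and byte widths are standard storage sizes, and the helper name `weight_memory_gb` is made up for illustration. It ignores activations, the KV cache, and framework overhead, so real usage will be higher.

```python
# Rough weights-only memory estimate (assumption: nominal parameter counts,
# no activations, KV cache, or runtime overhead included).

# Standard storage widths, in bytes per parameter.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}

# Nominal sizes taken from the model names in the announcement.
MODELS = {
    "Stable LM 2 12B": 12e9,
    "Stable LM 2 1.6B": 1.6e9,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, num_params in MODELS.items():
        for precision, nbytes in BYTES_PER_PARAM.items():
            gb = weight_memory_gb(num_params, nbytes)
            print(f"{name} @ {precision}: ~{gb:.1f} GB")
```

Under these assumptions, the 12B weights take about 24 GB at 16-bit precision, while the 1.6B weights take about 3.2 GB, which is why quantized or lower-precision formats matter for running the larger model on a single consumer GPU.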

According to Stability AI, this model can handle tasks typically feasible only for significantly larger models, such as large Mixture-of-Experts (MoE) models, which often require substantial computational and memory resources. The instr